This post describes how a speech recognition engine is automatically adapted to different approach areas by unsupervised learning from surveillance data and voice recordings.

The HAAWAII project aims to research and develop a reliable, error-resilient and adaptable solution for automatically transcribing voice commands issued by both air traffic controllers (ATCos) and pilots. Developing new models requires large amounts of audio data with corresponding transcriptions. The air navigation service providers (ANSPs) for Icelandic en-route airspace and the London TMA (Terminal Manoeuvring Area) collect the audio data of the controllers and the pilots.

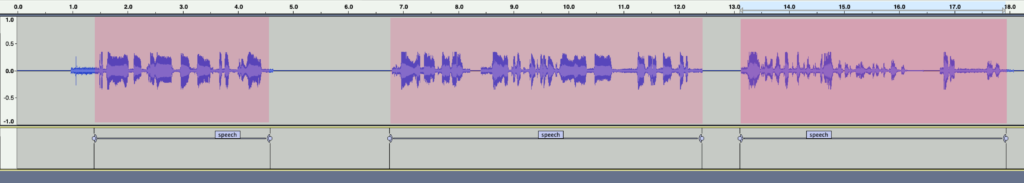

To process the large amount of voice data for building machine learning models, the commands must first be transcribed and annotated as efficiently as possible. The first step in processing the audio data is automatic segmentation, which classifies the audio into speech and non-speech parts; the speaker (ATCo or pilot) is then assigned to each speech part. The speech/non-speech decision for each frame is based on the index of the maximum output posterior. Majority voting and logistic regression are applied to fuse the decisions of the language-dependent systems. In addition, using a small development set to train the logistic regression further improves the performance of the speech activity detection (SAD) system [1]. The images below show an example of the obtained audio data and its segmented output: the first image shows the waveform of the WAV signal, and the second shows that three segments have been detected.
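The frame-level decision and the two fusion schemes can be illustrated with a minimal Python sketch. It assumes a hypothetical data layout: each language-dependent system emits, per frame, a list of output posteriors whose index 0 is the non-speech class, and the logistic-regression fusion receives one speech score per system (for example one minus the non-speech posterior). All function names, the batch gradient-descent training and its parameters (`lr`, `epochs`, `threshold`) are illustrative assumptions, not the implementation of [1].

```python
"""Sketch: frame-level speech/non-speech decisions from output posteriors,
fused across several language-dependent systems (hypothetical data layout)."""
import math
from typing import List, Sequence, Tuple

NON_SPEECH_INDEX = 0  # assumption: output index of the non-speech (silence) class


def frame_decision(posteriors: Sequence[float]) -> int:
    """Return 1 (speech) unless the maximum posterior belongs to the non-speech class."""
    best = max(range(len(posteriors)), key=lambda k: posteriors[k])
    return 0 if best == NON_SPEECH_INDEX else 1


def majority_vote(decisions: List[List[int]]) -> List[int]:
    """Fuse per-frame 0/1 decisions (one list per system); ties count as non-speech."""
    n_systems = len(decisions)
    return [1 if 2 * sum(votes) > n_systems else 0 for votes in zip(*decisions)]


def _sigmoid(z: float) -> float:
    # numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic_fusion(scores: List[List[float]], labels: List[int],
                          lr: float = 0.5, epochs: int = 500) -> Tuple[List[float], float]:
    """Fit fusion weights by batch gradient descent on a small labelled development set.
    scores[t][s] is the speech score of system s for frame t."""
    n_feat, n = len(scores[0]), len(scores)
    w, b = [0.0] * n_feat, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_feat, 0.0
        for x, y in zip(scores, labels):
            # gradient of the mean log-loss: (p - y) * x for weights, (p - y) for bias
            err = _sigmoid(sum(wk * xk for wk, xk in zip(w, x)) + b) - y
            for k in range(n_feat):
                grad_w[k] += err * x[k]
            grad_b += err
        w = [wk - lr * g / n for wk, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def logistic_fusion(scores: List[List[float]], w: List[float], b: float,
                    threshold: float = 0.5) -> List[int]:
    """Label a frame as speech when the fused probability reaches the threshold."""
    return [1 if _sigmoid(sum(wk * xk for wk, xk in zip(w, x)) + b) >= threshold else 0
            for x in scores]


if __name__ == "__main__":
    # three hypothetical systems, four frames, outputs [non-speech, class 1, class 2]
    sys_a = [[0.9, 0.05, 0.05], [0.2, 0.7, 0.1], [0.1, 0.3, 0.6], [0.8, 0.1, 0.1]]
    sys_b = [[0.6, 0.3, 0.1], [0.5, 0.4, 0.1], [0.2, 0.2, 0.6], [0.3, 0.6, 0.1]]
    sys_c = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1], [0.3, 0.4, 0.3], [0.9, 0.05, 0.05]]
    per_system = [[frame_decision(p) for p in s] for s in (sys_a, sys_b, sys_c)]
    print(majority_vote(per_system))  # [0, 1, 1, 0]
```

Majority voting needs no training data, whereas the logistic-regression fusion learns one weight per system from the development set, so more reliable systems can receive more influence. Treating ties as non-speech is an arbitrary choice of this sketch.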

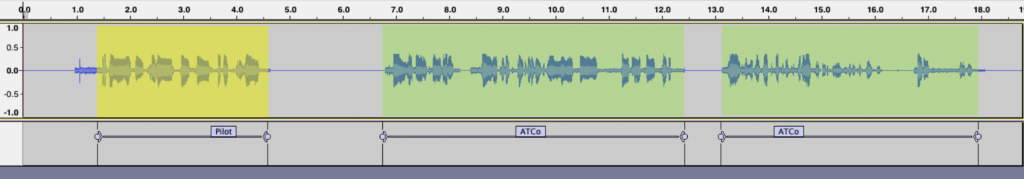
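Turning fused frame-level decisions into speech segments, such as the three detected in the example, can be sketched as follows. The 10 ms frame shift and the `max_gap` (pause bridging) and `min_len` (minimum segment length) parameters are hypothetical post-processing choices for illustration; the source does not state how the HAAWAII system smooths its output.

```python
"""Sketch: turning per-frame speech/non-speech labels into time segments."""
from typing import List, Tuple

FRAME_SHIFT_S = 0.01  # assumption: 10 ms frame shift


def frames_to_segments(labels: List[int], max_gap: int = 0,
                       min_len: int = 1) -> List[Tuple[float, float]]:
    """Return (start, end) times in seconds of speech segments.

    max_gap: non-speech runs of at most this many frames are bridged (hypothetical).
    min_len: segments shorter than this many frames are dropped (hypothetical).
    """
    # 1) collect runs of consecutive speech frames as [start, end) frame indices
    runs: List[List[int]] = []
    start = None
    for i, lab in enumerate(labels):
        if lab == 1 and start is None:
            start = i
        elif lab == 0 and start is not None:
            runs.append([start, i])
            start = None
    if start is not None:
        runs.append([start, len(labels)])

    # 2) merge runs separated by short pauses
    merged: List[List[int]] = []
    for s, e in runs:
        if merged and s - merged[-1][1] <= max_gap:
            merged[-1][1] = e
        else:
            merged.append([s, e])

    # 3) drop very short segments and convert frame indices to seconds
    return [(round(s * FRAME_SHIFT_S, 3), round(e * FRAME_SHIFT_S, 3))
            for s, e in merged if e - s >= min_len]


if __name__ == "__main__":
    frame_labels = [0, 1, 1, 1, 0, 1, 1, 0, 0, 0, 1, 1, 1, 1, 0, 0, 1, 0]
    print(frames_to_segments(frame_labels, max_gap=1, min_len=2))
    # [(0.01, 0.07), (0.1, 0.14)]
```

In the usage example, the one-frame pause at frame 4 is bridged, and the isolated speech frame at index 16 is discarded as too short.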

Once an audio file is segmented into speech and non-speech, the next step is to obtain the speaker labels (ATCo or pilot) for the speech segments. These labels are generated by a speaker diarization system. The current system uses the Information Bottleneck (IB) approach [2], which assigns speakers within an audio file by optimizing the clustering of feature vectors with respect to a set of relevance variables. Figure 3 shows an illustration of the generated speaker labels for the segmented speech.
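The IB principle looks for a clustering C of the input X that keeps as much information as possible about the relevance variables Y, i.e. it maximises I(Y;C) - (1/β) I(C;X). A common way to optimise it is agglomerative IB (aIB), which greedily merges the two clusters whose merge loses the least information about Y. The sketch below assumes that every speech segment already comes with a relevance distribution p(y|x) and a prior weight p(x), takes the limit β → ∞ (so the merge cost is the weighted Jensen-Shannon divergence), and stops at a given number of clusters; the real system decides the number of speakers with its own criterion, which is not reproduced here.

```python
"""Sketch: agglomerative Information Bottleneck (aIB) clustering of speech segments.

Assumptions (not taken from the cited system): each segment x has a relevance
distribution p(y|x) and a prior weight p(x); beta -> infinity, so only the loss
of I(C;Y) is minimised; the number of clusters is given instead of selected.
"""
import math
from typing import List, Sequence


def _kl(p: Sequence[float], q: Sequence[float]) -> float:
    # Kullback-Leibler divergence; terms with p_k = 0 contribute nothing
    return sum(pk * math.log(pk / qk) for pk, qk in zip(p, q) if pk > 0)


def merge_cost(w_i: float, p_i: Sequence[float], w_j: float, p_j: Sequence[float]) -> float:
    """Loss in I(C;Y) when merging clusters i and j: (w_i + w_j) * JS divergence."""
    w = w_i + w_j
    a, b = w_i / w, w_j / w
    m = [a * x + b * y for x, y in zip(p_i, p_j)]
    return w * (a * _kl(p_i, m) + b * _kl(p_j, m))


def aib_cluster(rel: List[Sequence[float]], weights: List[float], n_clusters: int) -> List[int]:
    """Greedily merge the pair with the smallest information loss until n_clusters remain."""
    members = [[i] for i in range(len(rel))]
    dists = [list(p) for p in rel]
    w = list(weights)
    while len(members) > n_clusters:
        _, i, j = min((merge_cost(w[a], dists[a], w[b], dists[b]), a, b)
                      for a in range(len(members)) for b in range(a + 1, len(members)))
        tot = w[i] + w[j]
        # merged relevance distribution: p(y|c) = (w_i p(y|c_i) + w_j p(y|c_j)) / (w_i + w_j)
        dists[i] = [(w[i] * x + w[j] * y) / tot for x, y in zip(dists[i], dists[j])]
        w[i] = tot
        members[i].extend(members[j])
        del members[j], dists[j], w[j]  # j > i, so index i stays valid
    labels = [0] * len(rel)
    for k, group in enumerate(members):
        for seg in group:
            labels[seg] = k
    return labels


if __name__ == "__main__":
    # four hypothetical segments with relevance distributions over three variables
    rel = [[0.8, 0.1, 0.1], [0.1, 0.1, 0.8], [0.75, 0.15, 0.1], [0.1, 0.2, 0.7]]
    print(aib_cluster(rel, [0.25] * 4, n_clusters=2))  # [0, 1, 0, 1]
```

Because the merge cost is weighted by the combined cluster prior, large clusters are merged only when their relevance distributions are very similar, which keeps dominant speakers from absorbing short, dissimilar segments.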

The next step is to manually verify and correct the automatic speech and speaker segmentation. This step is required to boost the performance of the automatic speech recognition (ASR) system. Once this is done, a first set of automatic transcriptions is obtained from the ASR system. The automatically transcribed data is then again manually checked and corrected if needed. The next step is automatic annotation, which extracts the ATC semantics from the transcriptions, followed by a manual checking and correction process. The manual steps are tool-supported and performed by the ANSPs participating in the HAAWAII project. The transcriptions and annotations are used to improve the performance of the resulting assistant-based speech recognition (ABSR) system by machine learning.

Once roughly twenty hours of manually verified data are available, the manual steps are no longer needed. From then on, the ABSR system is improved automatically by adding more and more data, i.e. by unsupervised learning. Unlike other unsupervised approaches, however, the approach used in the HAAWAII project also relies on context from the available surveillance data. This makes it possible to classify the automatic transcriptions and annotations into good and bad learning data.
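One way surveillance context can separate good from bad learning data is a plausibility check on the callsign: an automatically annotated utterance is kept only if its callsign was actually observed in the surveillance data around the time it was spoken. The sketch below illustrates that idea under hypothetical assumptions (one callsign per utterance, surveillance as `(timestamp, callsign)` pairs, a ±60 s window and an additional ASR-confidence threshold); the exact criteria used in HAAWAII are not described here, and `Utterance`, `callsigns_near` and `split_learning_data` are illustrative names.

```python
"""Sketch: splitting automatically annotated utterances into good and bad
learning data with the help of surveillance data (hypothetical criteria)."""
from dataclasses import dataclass
from typing import List, Set, Tuple


@dataclass
class Utterance:
    start_time: float   # seconds since start of recording
    transcript: str     # automatic transcription
    callsign: str       # callsign extracted by the automatic annotation
    confidence: float   # ASR confidence in [0, 1] (hypothetical field)


def callsigns_near(surveillance: List[Tuple[float, str]], t: float, window: float) -> Set[str]:
    """Callsigns observed in the surveillance data within +/- window seconds of t."""
    return {cs for ts, cs in surveillance if abs(ts - t) <= window}


def split_learning_data(utterances: List[Utterance], surveillance: List[Tuple[float, str]],
                        window: float = 60.0, min_conf: float = 0.5
                        ) -> Tuple[List[Utterance], List[Utterance]]:
    """Accept an utterance only if its callsign is plausible and the ASR is confident."""
    good: List[Utterance] = []
    bad: List[Utterance] = []
    for utt in utterances:
        plausible = utt.callsign in callsigns_near(surveillance, utt.start_time, window)
        (good if plausible and utt.confidence >= min_conf else bad).append(utt)
    return good, bad


if __name__ == "__main__":
    radar = [(0.0, "BAW123"), (30.0, "DLH4AB"), (200.0, "ICE501")]
    utts = [
        Utterance(10.0, "speedbird one two three descend flight level eight zero", "BAW123", 0.9),
        Utterance(15.0, "speedbird one two eight turn left heading two seven zero", "BAW128", 0.9),
        Utterance(190.0, "iceair five zero one climb flight level three four zero", "ICE501", 0.3),
        Utterance(210.0, "iceair five zero one contact reykjavik", "ICE501", 0.8),
    ]
    good, bad = split_learning_data(utts, radar)
    print([u.callsign for u in good])  # ['BAW123', 'ICE501']
```

In the usage example, "BAW128" is rejected because no such aircraft appears in the surveillance data, which is typical of a misrecognised digit, and the low-confidence ICE501 utterance is rejected by the confidence threshold.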

References:

[1] Seyyed Saeed Sarfjoo, Srikanth Madikeri, and Petr Motlicek, “Speech Activity Detection Based on Multilingual Speech Recognition System”, 2020.

[2] Srikanth Madikeri, Ivan Himawan, Petr Motlicek, and Marc Ferras, “Integrating online i-vector extractor with information bottleneck based speaker diarization system”, Sixteenth Annual Conference of the International Speech Communication Association, 2015.

Contact information:

Amrutha Prasad: amrutha.prasad@idiap.ch

Dr. Petr Motlicek: petr.motlicek@idiap.ch